Issue 37, Winter/Spring 2024

https://doi.org/10.70090/MH24RVSP

Abstract

The integration of artificial intelligence (AI) into media has made it crucial to understand public acceptance of this technology. This research develops and tests four models using structural equation modelling to analyze the factors influencing public acceptance of AI in media. The research relies on a survey conducted in Egypt and Saudi Arabia, which yielded a sample of 540 individuals. A novel theoretical model, which integrates the technology acceptance model (TAM) with the virtual social presence scale, was developed to clarify the factors that influence the public's acceptance of AI in media. The study provides in-depth findings regarding the elements that influence public acceptance of AI in media, and it highlights a need for further research to better understand this emerging technology.

Introduction

In the context of social presence theory, virtual social presence is a pivotal concept for understanding interactions in virtual environments, such as the internet, video games, and virtual reality. Garrison, Anderson, and Archer (2000), and more recently Garrison (2017), describe social presence as the salience of others across various media and highlight its importance in promoting meaningful online interactions. Enhanced virtual social presence significantly boosts the frequency of online interactions, which creates more immersive and engaging experiences for users (Tu and McIsaac 2002; Jin 2012; Jung 2011). The core premise of social presence theory is that the sensation of being with others in a shared virtual space profoundly influences both the experience and behavior of users. This presence facilitates mutual understanding among communicators of message content, emotional states, intentions, and objectives, which leads to mutual behavioral and emotional adaptations (Mechant 2012; Li 2010). Consequently, the level of social presence not only affects the quality of interactions, but also influences which media the audience selects for specific tasks. This underscores the pivotal role of social presence in virtual communication dynamics.

Artificial Intelligence (AI) is a primary driving force in the contemporary scientific and technological revolution, as it profoundly influences social development, human productivity, and societal dynamics (Birtchnell 2018). The term Artificial Intelligence was coined in 1956 by computer scientist John McCarthy. Since that time, AI has evolved by incorporating technologies that enable automatic pattern recognition and predictive analytics, as well as augmenting human cognitive capacities (Broussard et al. 2019). In 2020, the global AI industry reached a market size of 62.35 billion USD, with anticipated contributions to the global economy of 15.7 trillion USD by 2030 (Sun, Hu, and Wu 2024). AI's transformative potential is particularly evident in digital media, where innovations such as deep learning have revolutionized visual, speech, and natural language processing (Chesney and Citron 2019).

The integration of AI into the media landscape has ushered in a new era that has disrupted traditional news production, distribution, and consumption systems. As such, there are profound implications for industry dynamics (Xie 2021). Journalism is inherently intertwined with technological evolution. Accordingly, this industry has witnessed a surge in AI adoption as newsrooms across the globe integrate automated AI tools into editing processes (Abdulmajeed and Fahmy 2022). AI algorithms have become instrumental in generating news articles and summarizing scientific data for media institutions, such as The Associated Press and Forbes (Henestrosa, Greving, and Kimmerle 2023). The convergence of AI and journalism has prompted major transformations in the media landscape, driven in part by technology giants such as Netflix, Amazon, Apple, Google, and Facebook. This convergence has reshaped audience behavior, distribution platforms, content strategies, and business models (Chan-Olmsted 2019). As a result of these tremendous changes, it is necessary to explore public perceptions of AI integration into journalism.

Academic research within the Arab context lacks insight into user preferences for automated versus human-written news (Abdulmajeed and Fahmy 2022). Arab studies on AI in journalism tend to focus on journalists and overlook audience perceptions, which signals a need for investigation (Musa and Abdulfattah 2020). The digitization of news production and the adoption of AI technologies have revolutionized media production and communication, which significantly impacts culture and society (Song 2022). However, despite its rapid growth, AI usage in Arab media remains in its nascent stages, which signals a need for further exploration (Fahmy and Attia 2020). Arabic-speaking news media face additional challenges due to a language-based divide, which hinders the adoption of AI tools (Ali and Hassoun 2019). While the adoption of AI in Arab media is still in its early stages, there is growing interest in research and development within this field (Fahmy and Attia 2020). This highlights the need to conduct a comprehensive study of Arab public perceptions of the integration of AI in newsrooms. Consequently, the current study aims to address questions posed by academics and journalists regarding Arab public perceptions of the application of AI technologies in media. This was achieved by examining the effect of the Arab public's virtual social presence on their acceptance of AI technology in media practices. In practical terms, media organizations should consider audience concerns and fears.

Literature Review

The First Variable: Virtual Social Presence

Jin and Youn (2023) examined consumer behavior as it relates to AI-powered chatbots, particularly the impact of social presence. They suggest that deeper examination of the underlying mechanisms and potential moderating factors could provide insight into user engagement and product perception. Oh et al. (2023) highlight the influence of social presence on supportive interactions and the reduction of loneliness among young users. They emphasize the need to explore precise factors by addressing confounding variables and sample representativeness to enhance the generalizability of their findings.

Cummings and Wertz (2022) contribute to the theory of social presence by proposing a precise definition, which includes clarification of operationalization and measurement. They recommend a critical engagement with the existing body of literature to enhance conceptual clarity. Oh, Bailenson, and Welch (2018) explore social presence within virtual reality interactions. They emphasize the need for critical engagement with theoretical frameworks and empirical evidence, as well as point out the importance of ecological validity and ethical considerations in virtual interactions. Guo, Jin, and Qi (2023) explore how social presence influences the intention to share information during public crises, which highlights the importance of moderating and mediating factors that affect the relationship between social presence, risk perception, and consistency of opinion climates. Similarly, Kaya et al. (2024) found that greater knowledge, and a harmonious relationship with AI, correlate with more positive attitudes towards AI.

The Second Variable: Acceptance of Artificial Intelligence

Research into AI adoption reveals that various factors influence public acceptance of AI in media. Choung, David, and Ross (2023) highlight trust and ethical compliance as critical determinants of AI acceptance. Stein et al. (2024) found that younger individuals tend to have more positive attitudes towards AI. Schepman and Rodway (2023) found correlations between personality traits and attitudes toward AI, which suggests that personality influences AI acceptance. However, a deeper understanding of these predictors is essential for effective policy and practice. Kieslich, Keller, and Starke (2022) emphasized the importance of ethical considerations in fostering public acceptance. Kim et al. (2023) associate public concern regarding AI with the spread of misleading AI-generated information, biases, and privacy issues. Joud Alkhalifah et al. (2024) highlighted growing concerns regarding the future of humanity in an AI-dominated era, which identifies the need for ethical AI frameworks.

The integration of AI into everyday life requires attention to both technical benefits and societal risks. Weber et al. (2024) found that increased AI knowledge reduces fear but does not completely alleviate concerns about job replacement, which leads to hesitance in AI adoption. Furthermore, Gesk and Leyer (2022) noted a general preference for AI solutions in public services while emphasizing the importance of maintaining human involvement in certain domains. Herschel and Miori (2017) emphasize the importance of addressing these dual aspects. Kieslich, Lünich, and Marcinkowski (2021) call for extensive surveys to gauge public perceptions and inform future research.

The impact of AI on media and journalism has been widely studied. Song (2022) proposed optimizing AI news communication systems, which raised questions regarding the effects on journalistic integrity and public trust. Lu (2021) discussed the transformative impact of AI on news production and its implications for audience experience and content quality. Hu, Meng, and Zhang (2019) examined the impact of AI on journalism and content production while demonstrating societal implications. Devaraj, Easley, and Crant (2008) explored the relationship between personality traits and AI adoption attitudes, which revealed complex interactions within the Technology Acceptance Model (TAM).

Theoretical Framework

This research aims to develop a theoretical framework by integrating the Technology Acceptance Model (TAM) with the virtual social presence scale. This is done with the aim of analyzing audience acceptance of AI in media. While the TAM usually relies upon two main dimensions, perceived usefulness and perceived ease of use, this research only utilized the perceived usefulness dimension. Perceived ease of use was not addressed because it falls outside the framework of the current study, which is to assess the impact of the perceived usefulness of AI on audience acceptance. Ease of use was therefore disregarded because the study focuses on the outcomes and benefits that the audience derives from using AI.

Indeed, the use of the virtual social presence scale, the digital competence scale, the AI anxiety scale, and the current knowledge of AI scale provided an effective framework to comprehend the dynamics of audience virtual interaction with technology. Accordingly, the theoretical analysis in this research is based on the development of the TAM model, which emphasized perceived usefulness as a key component of AI acceptance in media.

Research Method

The current research relies on the scale as the instrument of the study. Several previous studies related to the employment of AI in media were reviewed to prepare instruments for the current research. Modifications have been made to identified scales to better suit the variables of the current study, particularly within the Arab environment. Thus, the following scales were prepared.

Operationalization of the Virtual Social Presence Scale

First, the four-item virtual social presence scale was prepared in light of previous studies. Minor modifications were made to fit this media research, particularly as it relates to Arab participants from Egypt and Saudi Arabia. One phrase was slightly modified: ‘the digital environment allows me “to interact freely without time or place limitations so it reduces…[my] artificial intelligence anxiety”’ (Almaiah et al. 2022, 10). The modification was made to better fit the nature of the digital environment, as well as to adapt to specific cultural and social circumstances, with a focus on the effect of virtual social presence in reducing anxiety associated with AI.

In addition, the phrase ‘there is a feeling of other humans interacting with the individual’ was adapted from Cummings and Wertz (2022). The modification maintained the idea of social presence while emphasizing the context of virtual media. The two phrases ‘I can easily communicate with other participants in different virtual communication environments’ and ‘I am familiar with non-verbal communication elements in the virtual environment such as emojis’ were adapted from research conducted by Hew et al. (2022). The phrases were modified to fit virtual interaction in digital media.

Operationalization of the Existing Knowledge of AI Scale

Second, the five-item current knowledge of AI scale was prepared in light of previous studies. Minor modifications were made to fit the Arab media context. The phrase ‘I am very interested in AI’ was adopted from Kieslich, Keller, and Starke (2022). The phrase was modified to suit public interest in the uses of AI in the media. Three other phrases were derived from the study of Sun, Hu, and Wu (2024). These phrases include ‘Have you subscribed to a service that uses AI technology such as (chat GPT, Google Translate, Google, Netflix, Facebook)’, ‘I am familiar with how AI is applied in journalism and media, such as (data analysis, automated reporting, video and photo editing, identifying audience interests)’, and ‘I understand the basic logic of employing AI in journalism and media’. These phrases were modified to suit the needs of the current study as it pertains to audience understanding of how AI is used in digital media.

Operationalization of the Perceived Usefulness Scale

Third, the eight-item perceived usefulness scale was prepared based on the study of Sun, Hu, and Wu (2024). The scale assesses the extent to which individuals perceive the benefits AI can offer in media. Adapted statements that assess AI’s ability to enhance the effectiveness of journalism included ‘AI will be a major boost to journalism and media,’ ‘AI can improve the accuracy of news content,’ and ‘AI can enhance the objectivity of news content.’ The scale also included assessments that pertain to improving the attractiveness and credibility of news content, as well as AI’s ability to detect fake news and rumors. This scale aimed to understand how AI can contribute to improving the quality of media and the user’s experience, and to raising the level of audience trust in the content.

Operationalization of the Artificial Intelligence Anxiety Scale

Fourth, the seven-item AI anxiety scale was derived from the study of Li and Huang (2020) to assess anxiety levels associated with AI. The adapted statements included measures of anxiety resulting from the use of AI, such as concerns regarding the collection of personal information, discrimination, and anxiety about AI decisions. This scale aimed to explore the extent to which these concerns affect individuals and how they deal with AI technology in their daily lives.

Operationalization of the Digital Competence Scale

Fifth, the fourteen-item digital competence scale was adapted from the study of Rodríguez-Moreno et al. (2021) to assess a participant’s familiarity with digital tools and social networks. The adapted statements focused on measuring a student’s digital competence while using digital media and communication tools during the COVID-19 pandemic. These statements helped assess the participant’s skills in navigating digital technology as well as various applications, which include familiarity with digital tools to achieve academic and professional goals.

Operationalization of the Artificial Intelligence Acceptance in Media Scale

Sixth, the eight-item acceptance of AI in media scale was derived from the study of Sun, Hu, and Wu (2024) to assess the participant’s attitude towards use of AI in media. The adapted statements identify the extent of a participant’s acceptance of AI in improving media production, which includes automatic writing of reports, video editing, image editing, and identifying audience interests. The scale includes an assessment of the expected benefits of AI and its ability to enhance accuracy and objectivity. It also assessed the impact of AI on improving the user experience and increasing audience engagement.

The modifications were reviewed and verified for their appropriateness as it relates to local, social, and cultural context. These evaluations were conducted by seven professors in the field of media and information systems, as well as three experts in the field of AI. Evaluators also verified the comprehensiveness of the scale, the accuracy of measuring current knowledge of AI, and the ease of understanding within the Arab audience. Based on these expert reviews, a scale consisting of eighty items was constructed. The items of the scale were extracted from the analysis of data, which was acquired from fifty respondents. Evaluators recommended removing 34 items due to repetitive wording. These modifications enhanced the validity of the results.

Data Collection

To enhance the generalizability of the findings, data was collected through an internet-based survey that was made available in Egypt and Saudi Arabia. The survey instrument was predominantly developed, disseminated, and administered using online methodologies. The survey was hosted on a publicly available survey platform for ease of access—https://forms.gle/FTVcP86XHBX6gG7x7—to facilitate completion by respondents. Participants were instructed to indicate their level of agreement with each questionnaire item. A total of 540 valid responses were collected from individuals that comprise diverse demographic profiles.

In terms of ethical considerations, strict steps were taken to ensure the protection of privacy and confidentiality of respondents and their data. The aims of the study were explicitly explained to all participants and informed consent was obtained before they responded to the questionnaire. Additionally, all data collected through Google Forms was protected and no information related to the identity of the participants was collected.

Manipulation Checks

At the beginning of the survey, a single attention check was used to screen out participants who did not meet the eligibility criteria, which follows the methodology established by Egelman and Peer (2015). Additionally, after completing the survey, participants were asked to assess their familiarity with algorithms using a rating scale ranging from one through five, with one indicating no knowledge at all and five denoting expert knowledge of algorithms. Participants were then presented with an open-ended question, which read ‘In your own words, please briefly explain your understanding of algorithms.’ The responses indicate that participants perceived algorithms as entities capable of autonomous decision-making.

Hypotheses

The Technology Acceptance Model (TAM) and other predictive frameworks are used to forecast an individual’s intentions to embrace a particular technology. Performance expectancy serves as a crucial predictor and is comparable to constructs such as perceived usefulness (Venkatesh et al. 2003). Based on the TAM, the study aimed to test the following hypotheses:

- Virtual social presence has a significant positive and direct impact on the acceptance of AI in Media.

- Existing knowledge of AI significantly influences the acceptance of AI in Media.

- Perceived usefulness significantly affects the acceptance of AI in Media.

- Artificial intelligence anxiety significantly influences the acceptance of AI in Media.

- Digital competence significantly impacts the acceptance of AI in Media.

- Gender moderates the relationship between virtual social presence and acceptance of AI in Media, with males demonstrating higher acceptance when compared to females.

- Age moderates the relationship between virtual social presence and acceptance of AI in Media.

- The effect of virtual social presence on acceptance is greater among Saudis compared to Egyptians.

- The effect of virtual social presence on acceptance is greater among holders of higher and intermediate qualifications.

Data Analysis

In the present study, partial least squares structural equation modelling (PLS-SEM) was used to analyze the conceptual model. PLS is regarded as the most developed and general system for variance-based structural equation modelling (Sarstedt, Ringle, and Hair 2021). PLS path modelling, conducted with SmartPLS 3 software, was selected because it has been widely used in management and related fields and because the objective of this investigation was to predict the dependent variable based on an explanatory approach. Following MacKenzie and Podsakoff (2012), Harman's single-factor test was used to explore common method bias (CMB). The single common factor explained 16.08 percent of the variance. This is less than the fifty percent threshold, which indicates that common method bias was not a problem.
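The logic of Harman's single-factor check can be illustrated with a minimal sketch. It assumes an unrotated principal component extraction on the item correlation matrix, which is a common approximation and not necessarily the extraction used in this study. The function name `first_factor_share` and the synthetic responses are hypothetical and do not reproduce the survey data.

```python
import numpy as np

def first_factor_share(items: np.ndarray) -> float:
    """Share of total variance captured by the first unrotated principal component.

    items: array of shape (n_respondents, n_items) holding survey responses.
    """
    corr = np.corrcoef(items, rowvar=False)    # item-by-item correlation matrix
    eigvals = np.linalg.eigvalsh(corr)         # eigenvalues in ascending order
    return float(eigvals[-1] / eigvals.sum())  # largest eigenvalue over total variance

# Synthetic stand-in for survey responses (540 respondents, 45 items); not the study's data.
rng = np.random.default_rng(0)
responses = rng.normal(size=(540, 45))

share = first_factor_share(responses)
cmb_flag = share > 0.50  # rule of thumb: one factor above 50 percent signals possible CMB
print(f"first factor share: {share:.3f}, possible CMB: {cmb_flag}")
```

Applied to real data, a share below 0.50 would be read in the same way as the 16.08 percent reported above.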

Results

Measurement Model Assessment

The measurement model was assessed in terms of internal consistency reliability, convergent validity, and discriminant validity, as proposed by previous studies. Following Fornell and Larcker (1981), composite reliability (CR) was used as the reliability indicator, with a minimum value of 0.6. According to the data presented in Table 1, the reliability indicators of each construct in the current study exceeded the threshold value. This indicates acceptable internal consistency in all constructs. According to Sarstedt, Ringle, and Hair (2021), the outer loadings of each item for all constructs ought to be equal to or greater than 0.40. In the current study, all individual item loadings are above 0.4, which demonstrates that the constructs have sufficient convergent validity (see Table 1).

Table 1: Measurement Model

| Construct | Item | Loading | t-value | P-value | CR |
|---|---|---|---|---|---|
| Cut-off | | >0.4 | >1.96 | <0.05 | >0.6 |
| Virtual social presence | VSP1 | 0.606 | 6.851 | <.000 | 0.637 |
| | VSP2 | 0.406 | 3.532 | <.000 | |
| | VSP3 | 0.443 | 3.742 | <.000 | |
| | VSP4 | 0.478 | 4.098 | <.000 | |
| | VSP5 | 0.608 | 7.391 | <.000 | |
| Existing Knowledge of AI | KNOW1 | 0.581 | 12.251 | <.000 | 0.731 |
| | KNOW2 | 0.623 | 14.674 | <.000 | |
| | KNOW3 | 0.653 | 16.435 | <.000 | |
| | KNOW4 | 0.631 | 18.593 | <.000 | |
| | KNOW5 | 0.476 | 8.415 | <.000 | |
| Perceived usefulness | PU1 | 0.573 | 17.017 | <.000 | 0.762 |
| | PU2 | 0.524 | 13.721 | <.000 | |
| | PU3 | 0.476 | 10.863 | <.000 | |
| | PU4 | 0.629 | 18.843 | <.000 | |
| | PU5 | 0.417 | 6.950 | <.000 | |
| | PU6 | 0.550 | 13.574 | <.000 | |
| | PU7 | 0.559 | 13.257 | <.000 | |
| | PU8 | 0.541 | 13.417 | <.000 | |
| Artificial intelligence anxiety | ANX1 | 0.501 | 6.631 | <.000 | 0.720 |
| | ANX2 | 0.670 | 16.296 | <.000 | |
| | ANX3 | 0.680 | 14.796 | <.000 | |
| | ANX4 | 0.478 | 6.627 | <.000 | |
| | ANX5 | 0.496 | 6.584 | <.000 | |
| | ANX6 | 0.449 | 5.895 | <.000 | |
| Digital Competence | DC1 | 0.531 | 15.057 | <.000 | 0.836 |
| | DC2 | 0.579 | 16.704 | <.000 | |
| | DC3 | 0.556 | 16.008 | <.000 | |
| | DC4 | 0.536 | 13.624 | <.000 | |
| | DC5 | 0.412 | 9.103 | <.000 | |
| | DC6 | 0.432 | 9.946 | <.000 | |
| | DC7 | 0.568 | 18.053 | <.000 | |
| | DC8 | 0.528 | 15.397 | <.000 | |
| | DC9 | 0.567 | 16.930 | <.000 | |
| | DC10 | 0.584 | 17.209 | <.000 | |
| | DC11 | 0.535 | 15.114 | <.000 | |
| | DC12 | 0.445 | 10.564 | <.000 | |
| | DC13 | 0.608 | 20.770 | <.000 | |
| Artificial Intelligence Acceptance in Media | ACC1 | 0.591 | 20.265 | <.000 | 0.752 |
| | ACC2 | 0.440 | 9.192 | <.000 | |
| | ACC3 | 0.599 | 19.077 | <.000 | |
| | ACC4 | 0.501 | 11.371 | <.000 | |
| | ACC5 | 0.414 | 7.096 | <.000 | |
| | ACC6 | 0.417 | 7.841 | <.000 | |
| | ACC7 | 0.654 | 21.760 | <.000 | |
| | ACC8 | 0.562 | 14.884 | <.000 | |

CR=composite reliability; References: (Fornell and Larcker 1981; Sarstedt, Ringle, and Hair 2021).
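As an illustration of how composite reliability relates to the loadings in Table 1, the following sketch applies the standard formula, in which CR equals the squared sum of the loadings divided by that same quantity plus the summed error variances. It assumes standardized loadings, so that each error variance is one minus the squared loading. The function name is hypothetical, and the calculation is not claimed to be the exact routine used by SmartPLS.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized outer loadings.

    CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of (1 - loading^2)).
    """
    total = sum(loadings)
    error_variance = sum(1 - loading ** 2 for loading in loadings)
    return total ** 2 / (total ** 2 + error_variance)

# Loadings of VSP1 to VSP5 as reported in Table 1.
vsp_loadings = [0.606, 0.406, 0.443, 0.478, 0.608]
cr_vsp = composite_reliability(vsp_loadings)
print(round(cr_vsp, 3))  # 0.637, in line with the CR reported for virtual social presence
```

Because the published loadings are rounded to three decimals, values recomputed for other constructs may differ from the table in the last digit.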

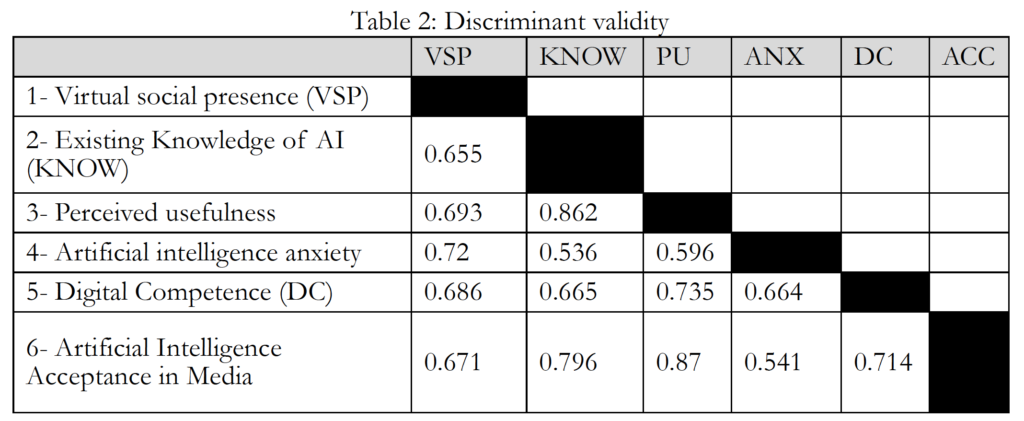

This research also relied on the heterotrait-monotrait (HTMT) ratio to test for discriminant validity. Gaskin, Godfrey, and Vance (2018) proposed that the HTMT value between constructs should not exceed 1. The results in Table 2 indicate that the greatest value found was 0.87, which established discriminant validity.
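The HTMT ratio can be sketched as follows, assuming its usual definition: the mean correlation between items of two different constructs, divided by the geometric mean of the average within-construct item correlations. The function `htmt` and the small correlation matrix are hypothetical illustrations and are not taken from Table 2.

```python
import numpy as np

def htmt(corr, idx_a, idx_b):
    """HTMT ratio for two constructs, given an item correlation matrix.

    corr: square item correlation matrix.
    idx_a, idx_b: positions of the items that belong to each construct.
    """
    # Mean correlation between items of different constructs (heterotrait).
    hetero = corr[np.ix_(idx_a, idx_b)].mean()

    def mean_within(idx):
        # Mean of the off-diagonal correlations inside one construct (monotrait).
        block = corr[np.ix_(idx, idx)]
        return block[np.triu_indices(len(idx), k=1)].mean()

    return float(hetero / np.sqrt(mean_within(idx_a) * mean_within(idx_b)))

# Toy matrix: two constructs with two items each (illustrative values only).
toy_corr = np.array([
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.6],
    [0.3, 0.3, 0.6, 1.0],
])
ratio = htmt(toy_corr, [0, 1], [2, 3])
print(round(ratio, 3))  # 0.5 for this toy matrix
```

A ratio well below the chosen cut-off is read as evidence that the two constructs are empirically distinct.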

Descriptive Statistics and Multiple Correlations

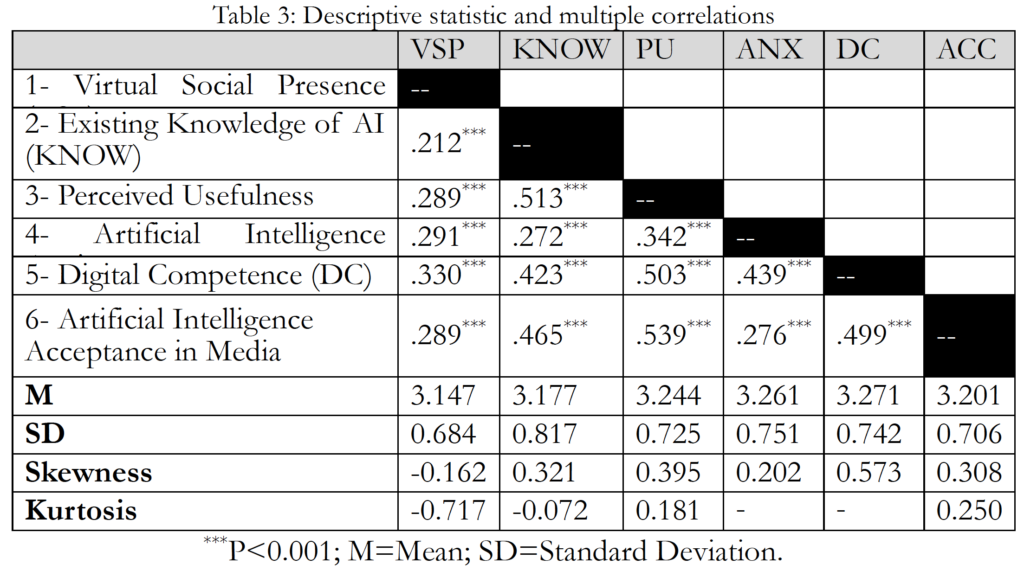

This section provides descriptive statistics, as well as correlations between the chosen constructs. As presented in Table 3, the descriptive statistics include the mean (M) and the standard deviation (SD). DC has the highest mean (M = 3.271), while VSP has the lowest mean (M = 3.147). VSP also has the lowest variability (SD = 0.684), while KNOW has the highest variability (SD = 0.817). According to Hair et al. (2014) and Byrne (2016), skewness values that range from -2 to +2 and kurtosis values that range from -7 to +7 are considered appropriate for demonstrating normal distribution among the data. The results shown in Table 3 demonstrate that the values of skewness and kurtosis for all constructs fell within the acceptable range.
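The normality screen described above can be sketched in a few lines. This sketch assumes simple moment-based estimators and treats the kurtosis bound as applying to excess kurtosis; statistical packages often report bias-corrected versions, so values may differ slightly. The function names and the sample scores are hypothetical.

```python
import numpy as np

def skewness(x):
    """Moment-based skewness (third standardized moment)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return float((d ** 3).mean() / (d ** 2).mean() ** 1.5)

def excess_kurtosis(x):
    """Moment-based excess kurtosis (fourth standardized moment minus 3)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return float((d ** 4).mean() / (d ** 2).mean() ** 2 - 3.0)

def within_normality_bounds(x, skew_limit=2.0, kurt_limit=7.0):
    """Check the -2..+2 skewness and -7..+7 kurtosis ranges cited in the text."""
    return abs(skewness(x)) <= skew_limit and abs(excess_kurtosis(x)) <= kurt_limit

# Hypothetical construct scores on an assumed five-point scale.
scores = [1, 2, 3, 4, 5]
print(skewness(scores), excess_kurtosis(scores), within_normality_bounds(scores))
```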

The Pearson product-moment correlation coefficient was utilized to ascertain the extent of the link between the stated constructs, as well as the direction of the association. The results indicate that Artificial Intelligence Acceptance has a significant positive moderate relationship with Perceived Usefulness, a moderate relationship with Digital Competence, a moderate relationship with Existing Knowledge of AI, a weak relationship with Virtual Social Presence, and a weak relationship with Artificial Intelligence Anxiety.

The Structural Model

Path coefficients, collinearity diagnostics, the coefficient of determination (R²), effect size (f²), and predictive relevance (Q²) are used to analyze the structural model. Prior to assessing the structural model, the collinearity across constructs was explored (Table 4) using variance inflation factors (VIF). All values were less than the threshold of five stipulated by Sarstedt, Ringle, and Hair (2021). For the current study, two thousand bootstrapped samples were used to evaluate the significance of the path coefficients.
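Both diagnostics can be illustrated with a simplified sketch. It assumes that the VIF values are read from the diagonal of the inverse predictor correlation matrix, and it approximates a single standardized path by a Pearson correlation that is resampled 2,000 times. This is a stand-in for the SmartPLS bootstrapping routine, not a reproduction of it, and all names and data are hypothetical.

```python
import numpy as np

def variance_inflation_factors(predictors):
    """VIF per column: diagonal of the inverse of the predictor correlation matrix."""
    corr = np.corrcoef(predictors, rowvar=False)
    return np.diag(np.linalg.inv(corr))

def bootstrap_t(x, y, n_boot=2000, seed=0):
    """Bootstrap t-value for a bivariate standardized path (Pearson correlation)."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimate = np.corrcoef(x, y)[0, 1]
    draws = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        draws[b] = np.corrcoef(x[idx], y[idx])[0, 1]
    return float(estimate / draws.std(ddof=1))  # estimate over bootstrap standard error

# Synthetic construct scores for 540 respondents; not the study's data.
rng = np.random.default_rng(42)
x1 = rng.normal(size=540)
x2 = 0.5 * x1 + rng.normal(size=540)
outcome = 0.5 * x1 + rng.normal(size=540)

vifs = variance_inflation_factors(np.column_stack([x1, x2]))
t_value = bootstrap_t(x1, outcome)
print(vifs, t_value)  # VIF below 5 and t above 1.96 would pass the cut-offs used here
```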

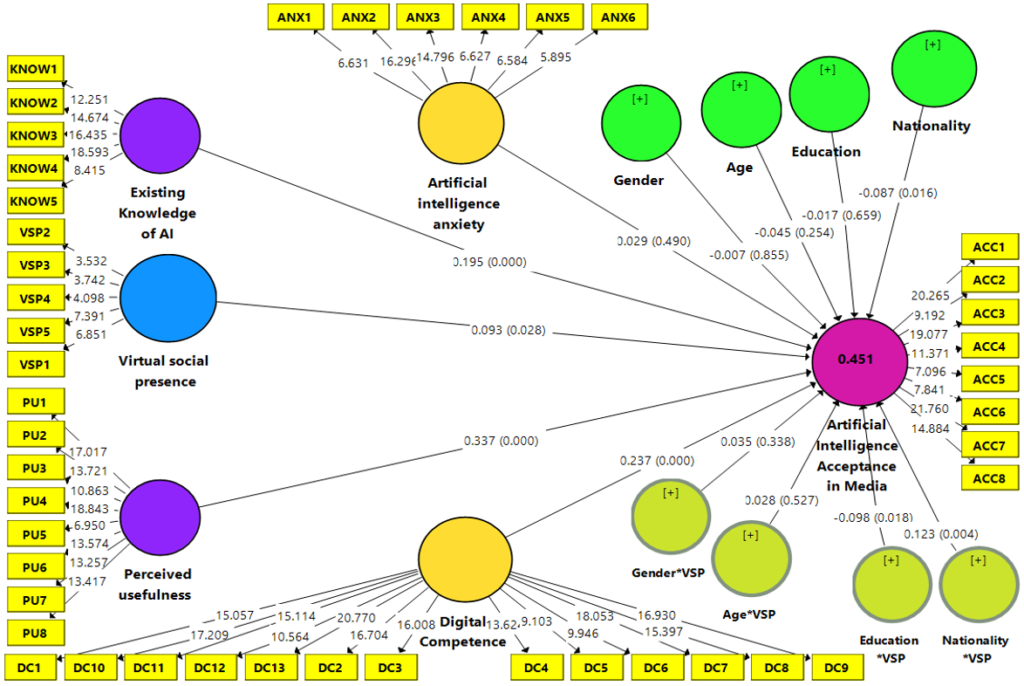

Figure 1: Structural model and hypothesis testing

Table 4: Path Coefficients and Hypothesis Testing

***P<0.001; **P<0.01; *P<0.05; ¥P<0.1; NS P>0.1. f² thresholds: > 0.02 (weak effect)a; > 0.15 (moderate effect)b; > 0.35 (strong effect)c. R² thresholds: > 0.1 (low)a; > 0.33 (moderate)b; > 0.67 (substantial)c. VIF should be less than 5; Q² should be greater than 0. Cut-off references: (Chin 1998; Cohen 1988; Falk and Miller 1992; Wetzels et al. 2009; Sarstedt, Ringle, and Hair 2021).


The estimates of the structural equation modelling are shown in Figure 1. We developed four models for testing the effect on Artificial Intelligence Acceptance in Media. The first model has only Virtual Social Presence as an independent variable, which has a significant positive effect on Artificial Intelligence Acceptance with a small effect size. We added both Existing Knowledge of AI and Perceived Usefulness in the second model and observed a small and a moderate effect, respectively. In the third model, we added Artificial Intelligence Anxiety and Digital Competence and found that only Digital Competence has a significant positive effect on Artificial Intelligence Acceptance, with a small effect size. The final model reveals that Virtual Social Presence, Existing Knowledge of AI, Perceived Usefulness, and Digital Competence have a significant positive effect on Artificial Intelligence Acceptance. Additionally, Nationality has a significant negative effect on Artificial Intelligence Acceptance, which indicates that Saudis accept artificial intelligence more than Egyptians.
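The effect size labels used above follow from Cohen's f², which compares the R² of a model with and without a given predictor. A minimal sketch is shown below, using the thresholds listed in the note to Table 4. The function names and the two R² values in the example are hypothetical and are not results from this study.

```python
def cohen_f2(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f2: change in R2 when a predictor is added, relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)

def label_effect(f2: float) -> str:
    """Classify f2 using the 0.02 / 0.15 / 0.35 thresholds."""
    if f2 > 0.35:
        return "strong"
    if f2 > 0.15:
        return "moderate"
    if f2 > 0.02:
        return "weak"
    return "negligible"

# Hypothetical example: R2 rises from 0.40 to 0.45 when one construct is added.
example_f2 = cohen_f2(r2_included=0.45, r2_excluded=0.40)
print(round(example_f2, 3), label_effect(example_f2))
```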

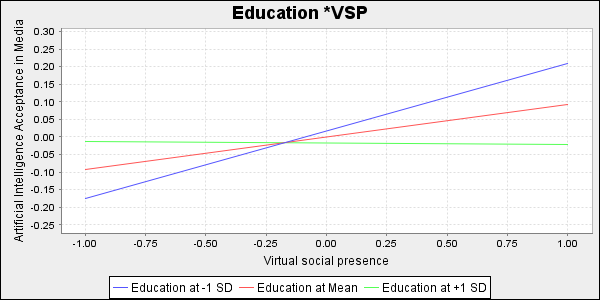

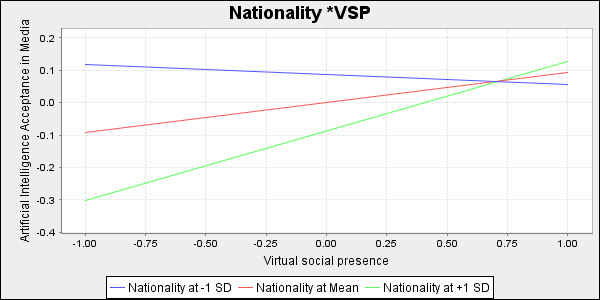

Figure 2: Interaction plot for the moderating role of Education on the relationship from VSP to ACC

Figure 3: Interaction plot for the moderating role of Nationality on the relationship from VSP to ACC

The moderator variable Education has a statistically significant negative influence on the relationship between Virtual Social Presence and ACC. This indicates that the relationship between Virtual Social Presence and ACC is weaker for postgraduates than for those with middle or university degrees. Further, the moderator variable Nationality has a statistically significant positive influence on the relationship between Virtual Social Presence and ACC. This indicates that the relationship between Virtual Social Presence and ACC is stronger for Saudis than for Egyptians. The coefficient of determination (R²) was calculated using the PLS-SEM structural model. In this study, all values were above the threshold of 0.10. The results for the final model indicated that 45 percent of the variation in Artificial Intelligence Acceptance could be explained by the variation in the independent variables. Also, cross-validated redundancy (Q²) was employed to assess the predictive relevance of the latent variables. A value of Q² larger than zero indicates the model has predictive relevance, as stipulated by Sarstedt, Ringle, and Hair (2021). Table 4 shows that the Q² values for the current study are greater than zero. As a result, this model has predictive relevance.
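How a moderator changes the slope between two constructs can be illustrated with an interaction term in an ordinary least squares regression. This is a simplified stand-in for the PLS-SEM moderation analysis reported here. The data are synthetic and noise-free, and the coefficients and variable names are hypothetical.

```python
import numpy as np

def fit_moderation(x, m, y):
    """OLS fit of y = b0 + b1*x + b2*m + b3*(x*m); returns (b0, b1, b2, b3)."""
    design = np.column_stack([np.ones_like(x), x, m, x * m])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coefs

# Synthetic, noise-free data with a known negative interaction (illustrative only).
rng = np.random.default_rng(1)
vsp = rng.normal(size=200)                          # predictor scores
group = rng.integers(0, 2, size=200).astype(float)  # 0/1 dummy for the moderator
acc = 0.30 * vsp + 0.10 * group - 0.20 * vsp * group

b0, b1, b2, b3 = fit_moderation(vsp, group, acc)
slope_group0 = b1       # slope of the predictor when the dummy is 0
slope_group1 = b1 + b3  # slope of the predictor when the dummy is 1
print(round(slope_group0, 3), round(slope_group1, 3))
```

A negative interaction coefficient means the slope is flatter for the group coded 1, which mirrors the interpretation given above for Education; a positive one mirrors the interpretation for Nationality.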

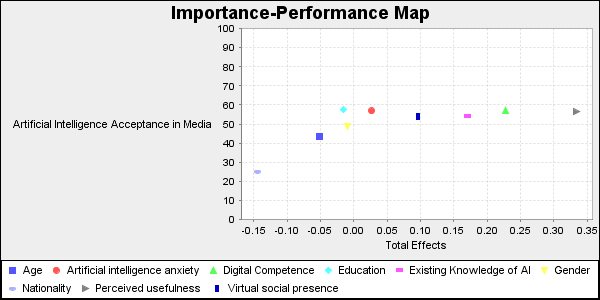

Importance Performance Map Analysis

Importance performance map analysis (IPMA) was utilized to provide additional insight by combining the importance (I) and performance (P) dimensions of analysis, as stipulated by Ringle and Sarstedt (2016). IPMA enables the identification of areas where action is necessary. Specifically, one may identify elements that are relatively important yet perform poorly, and then deploy management methods that initiate change. Figure 4 depicts the constructs that influence the dependent variable Artificial Intelligence Acceptance in Media. The IPMA findings are displayed as a two-dimensional graph. The horizontal axis describes the importance (total effect) of influential factors on a scale of 0 to 1. The vertical axis describes their performance with a range of 0 to 100. Figure 4 shows that Perceived Usefulness (0.333) was the most important construct, followed by Digital Competence (0.228), Existing Knowledge of AI (0.171), and Virtual Social Presence (0.096), among others. Moreover, Education performed the best (57.5 percent), followed by Digital Competence (57.09 percent), Artificial Intelligence Anxiety (56.849 percent), Perceived Usefulness (56.411 percent), Existing Knowledge of AI (54.375 percent), and Virtual Social Presence (54.006 percent), among others.

Figure 4: Importance performance map
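The two IPMA dimensions can be sketched as follows. Performance is obtained by rescaling a score to a range of 0 to 100, and importance is used to rank the constructs. The sketch assumes a five-point response scale, which the text does not state explicitly. The importance values are those reported above for four of the constructs, and the function name is hypothetical.

```python
def rescale_performance(score, scale_min=1.0, scale_max=5.0):
    """Rescale a score from its original range to 0-100 (five-point scale assumed)."""
    return (score - scale_min) / (scale_max - scale_min) * 100.0

# Importance (total effects) reported in the text for four constructs.
importance = {"PU": 0.333, "DC": 0.228, "KNOW": 0.171, "VSP": 0.096}

# Rank constructs from most to least important.
ranked = sorted(importance, key=importance.get, reverse=True)
print(ranked)

# A scale midpoint of 3 on the assumed 1-5 range maps to a performance of 50.
print(rescale_performance(3.0))
```

Constructs that rank high on importance but show comparatively low performance are the ones an IPMA would single out for attention.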

Discussion and Conclusion

This research provides a comprehensive overview of the factors that meaningfully influence public acceptance of AI in media among Saudis and Egyptians. Four models were developed to test the impact of these factors as each demonstrates its unique effect on AI acceptance. Findings confirm that Virtual Social Presence positively and significantly influences public acceptance of AI among Saudis and Egyptians. This positive effect suggests that social relationships in virtual environments may contribute to individual acceptance and trust in smart technology.

The analysis began with the first model, which highlights virtual social presence as a key independent variable that significantly influences acceptance of AI in media. Understanding how individuals interact with AI is not just a technical issue, but also depends on psychological and social factors. It appears that social interaction and cohesion through digital media have contributed to shaping public attitudes, which indicates the significance of social factors in adopting smart technology. This suggests that enhancing virtual social presence leads to improved user experience and increased acceptance of smart technology. This result has several practical implications. Social media platforms and virtual social interactions can effectively enhance public understanding of and trust in AI. This knowledge can guide the design of future products and applications to ensure a better user experience and greater acceptance. However, there are several limitations and challenges to consider. The impact of Virtual Social Presence on the acceptance of AI may vary across cultures or time periods. Therefore, these findings encourage further studies to comprehend how virtual social relationships can be leveraged to enhance interaction with smart technology. Additionally, understanding the effects of other cultural and social factors on the acceptance of AI is crucial. These findings are consistent with multiple studies. More specifically, Oh et al. (2023) examined emotional factors in human-technology interaction and identified the crucial role of emotions in human interactions with technology. This was particularly true in virtual worlds where emotions enhance social interactions among teenagers. Tsai et al. (2021) and Castro-González, Admoni, and Scassellati (2016) further highlight the importance of emotions and psychological effects in human interactions with robots, which underscores the role of heightened feelings of social presence.

Tu (2005) examined social presence in new media and found that individuals utilize media to perceive the presence of others and enhance social presence through online social networks. Biocca, Harms, and Burgoon (2003) provide a comprehensive assessment pertaining to the importance of social presence in new media and emphasize its role in facilitating user interaction. In addition, Kaya et al. (2024) investigated attitudes towards AI and technology usage, which revealed positive attitudes towards AI are associated with increased levels of computer usage and knowledge about AI. Conversely, negative situations may trigger anxiety and adoption hesitancy. By intertwining these strands of research, we gain insight into the emotional dynamics of the human-technology relationship, the importance of social presence in new media contexts, as well as how emotional and psychological factors influence attitudes toward AI and technology usage.

In the second model, we add both Existing Knowledge of AI and Perceived Usefulness to observe their impact on the Acceptance of AI. Given the rapid technological advancements in AI, familiarity with AI and its integration in media would make the public more inclined to accept and embrace it. For example, if the public is aware that AI can solve complex problems more efficiently and quickly than humans, and there is good media coverage explaining how AI is used to enhance media production processes or improve viewer experience, then they may be more receptive to it. When the public realizes the benefits that AI may provide to the media industry, such as improving accuracy in targeted advertising or enhancing viewer experience through personalized content recommendations, they might be more inclined to accept it. A clear understanding of the potential benefits of smart technology can enhance acceptance and positive engagement with it.

These findings highlight the importance of providing knowledge, raising awareness, and elucidating the benefits and practical applications of AI for the public. Increased public awareness and understanding of AI can lead to increased trust and acceptance of AI technology in the media field. This result has several practical implications. Increased acceptance of smart technology in the media can lead to the development of more advanced and effective media solutions, which enhances the viewer’s experience and interaction with media. However, there are several limitations and challenges to consider. Difficulty may arise in determining effective methods to increase familiarity and understanding of AI among the public, especially with challenges related to effectively disseminating information and awareness. Therefore, these findings encourage future studies to measure how the current knowledge and understanding of AI affects audience behavior in media, explore effective future strategies to increase public knowledge and understanding of artificial intelligence, and evaluate their impact on public acceptance and adoption of smart technology in the media. Adding existing knowledge of AI and perceived usefulness appears to significantly enhance AI acceptance.

Regarding the perceived benefits of AI, Tavakoli et al. (2023) demonstrated that acceptance of AI increases significantly when individuals perceive benefits. Also, Lim and Zhang (2022) highlighted the role of perceived usefulness, ease of use, and addiction in driving actual usage of AI-supported platforms, which suggests the importance of incorporating these variables into acceptance models. Regarding trust in AI, Choung, David, and Ross (2023) and Ng (2024) emphasized the significance of trust, especially in conversational AI applications, as pivotal in AI acceptance.

These results differ from other studies. Pelau et al. (2021) revealed doubts pertaining to AI among segments of the population that were influenced by technological advancements and media influencers, which underscores the importance of fact-checking to address skepticism. Concerns regarding privacy, cybersecurity, and connectivity associated with AI, as well as the Internet of Things (IoT), were raised by Makori (2017), Cox, Pinfield, and Rutter (2019), and Fernandez (2015), which suggests the need for careful consideration of ethical implications. Providing additional insights, Gillath et al. (2020) emphasized the role of egalitarian values and attachment anxiety in predicting negative attitudes toward AI. Stein et al. (2024) found slightly positive attitudes related to AI among participants, which suggests a potential to shift attitudes through increased exposure to AI. Latikka et al. (2021) highlighted the potential for attitude changes towards AI following repeated encounters with the technology. Leyer and Schneider (2021) raised concerns pertaining to AI transparency, as well as its impact on human control and trust. Logg et al. (2019) indicated public openness to AI acceptance, albeit with simultaneous concerns about its applications. Thus, these studies illustrate the multidimensional nature of public attitudes towards AI, and comprehending these different variables is crucial to foster responsible integration into society. As such, while perceived benefits and trust contribute to acceptance, skepticism and ethical considerations must be addressed to ensure the positive impact of AI in media.

In the third model, we add both AI anxiety and digital competence, which demonstrated that only digital competence has a positive and significant effect on the acceptance of AI. Notably, anxiety about the application of AI in media did not have a notable effect on public acceptance of the technology. This may be due to variations in the level of anxiety among individuals or the lack of expected impact of anxiety on their behavior and response. Conversely, individuals with a high level of digital proficiency and ability to use technology effectively may be more inclined to accept and interact with AI in media. Digital proficiency skills can lead to increased confidence in using and relying on smart technology in media.

These results point to several practical implications. Digital competence plays an important role in public acceptance of AI in media, which indicates the importance of developing individual skills in digital technology. These findings can be used to design educational and training strategies that are aimed at enhancing digital competence and increasing acceptance of smart technology in media. Therefore, these findings encourage future studies aimed at a more precise understanding of the factors that influence public acceptance of AI in media, which should include a deeper analysis of the relationship between digital competence and other psychological factors, such as trust and anxiety. It is also possible to explore the impact of awareness and education strategies on understanding and accepting smart technology in media, which should include the role of awareness campaigns and training in increasing public awareness and understanding. This is consistent with the study conducted by Orešković et al. (2023), which emphasized the critical role of understanding AI technologies in shaping attitudes towards the technology. More specifically, higher levels of AI knowledge correlated with more positive views towards AI-enabled technologies, which indicates the influence of knowledge level on attitudes towards AI.

Examining concerns and anxiety regarding AI, Rhee and Rhee (2019) highlight dual feelings of hope and concern regarding AI advancements, while many experience anxiety about the potential risks associated with AI. Weber et al. (2024) demonstrated that fear surrounding AI is intricately linked to understanding of AI, as fear escalated in individuals who exhibited low or moderate knowledge of AI. The intention to engage with AI technologies, however, rises in correlation with greater AI knowledge, although this is tempered by apprehensions regarding AI replacing humans. Kaya et al. (2024) delved into negative aspects of attitudes toward AI and highlighted the role of personality traits, anxiety related to AI, and demographic factors in shaping attitudes toward AI technology.

Kim et al. (2023) examined anxiety stemming from misinformation and privacy apprehensions and posited that anxiety about AI is fueled by the dissemination of misinformation, biases, and privacy concerns. These findings underscore the complex interplay between knowledge, concerns, and attitudes towards AI. Joud Alkhalifah et al. (2024) examined existential anxiety and future trajectory concerns and their contribution to the broader discourse on concerns surrounding AI. This research delved into the overarching anxiety surrounding the future trajectory of humanity while shedding light on the existential concerns associated with AI advancements.

The relationship between Virtual Social Presence and the acceptance of AI is stronger among Saudis when compared to Egyptians. This disparity is likely a result of different customs, values, and attitudes that are influenced by cultural, social, and economic factors in each respective country. This could also be a result of advanced development of smart technology, as well as the ambitious marketing and communication campaigns pertaining to these developments, in Saudi Arabia when compared to Egypt. Further, differing economic factors within each respective country may wield an influence. This observation highlights the need for further research to understand the influence of economic factors and technological infrastructure on the future adoption of smart technology.

The relationship between Virtual Social Presence and AI acceptance is weaker among individuals with higher education degrees than those with middle or college degrees. This may be due to the differing needs and preferences of each respective group. Those with higher qualifications likely have higher expectations and more demanding criteria for smart technology, as well as different preferences and experiences while using and understanding it. Additionally, young people at various educational stages exhibit higher proclivity for integration or interaction within virtual environments when compared to those with master's and doctoral degrees. This difference is due to the higher level of awareness among the former group when compared to the other groups. These findings have practical implications for marketing and awareness campaigns. Specifically, there is a need to design strategies that ensure positive responses to smart technology, particularly among individuals with higher education degrees. However, there are limitations and challenges, such as identifying the factors that weaken this relationship among more educated individuals. Further studies are encouraged to better understand the effects of experience and personal preferences on interaction with smart technology. Additionally, it is recommended to develop customized strategies to increase acceptance and adoption of smart technology in media among this group.

The findings from our research demonstrate that higher levels of education did not significantly predict attitudes toward AI. This contradicts previous research that suggests individuals with higher levels of education tend to hold more positive attitudes towards AI (Gnambs and Appel 2019; Zhang and Dafoe 2019). Further, Masayuki (2016) observed a greater prevalence of positive attitudes towards AI among employees in companies with higher educational backgrounds when compared to those with lower educational backgrounds.

Gender and Attitudes Towards AI

Our research indicates that gender is not a predictor of attitudes towards AI. This contradicts previous findings that suggest males exhibit more positive attitudes towards AI technologies when compared to females (Fietta et al. 2022; Schepman and Rodway 2023; Sindermann et al. 2020; Sindermann et al. 2021). McClure (2017) revealed demographic and sociocultural factors influence AI acceptance. More specifically, concerns were more prevalent among the elderly and individuals with lower education levels. Additionally, Liang and Lee (2017) noted that women typically hold more negative attitudes towards AI when compared to men, which may potentially be due to societal barriers that limit women’s access to and interest in technical fields.

Age and Attitudes Towards AI

In some studies, age did not predict attitudes towards AI. Chocarro, Cortiñas, and Marcos-Matás (2021) found the intention to use technology did not increase with age among teachers adopting chatbots. Park et al. (2022) stated that older individuals display a higher acceptance of technologies powered by AI and tend to accept new technologies, which is likely a result of a proclivity to stay current with technology and a desire not to be perceived as outdated. Gillespie, Lockey, and Curtis (2021) indicated that younger individuals hold more positive attitudes towards AI. Stein et al. (2024) found that younger individuals are more likely to accept AI, which suggests a correlation between trust and age.

Conclusion

Perceived usefulness emerged as the most important factor pertaining to acceptance of AI, which indicates that identifying tangible and perceived benefits of using smart technology can significantly influence willingness to accept and adopt it. Digital competence ranked second, which suggests that ability to engage with digital technology plays a key role in AI acceptance. Although current knowledge of AI was less important than perceived usefulness and digital competence, it still played a role in the acceptance of smart technology. Increasing knowledge about AI may enhance confidence in its use, thereby increasing acceptance levels. Virtual Social Presence appeared to be less important, but it still has a role that influences acceptance of AI. Interaction and communication in the virtual environment can lead to increased acceptance of smart technology. Results also demonstrate that education and AI anxiety were important factors regarding acceptance of AI. Finally, more education and reduced AI anxiety could lead to greater acceptance and adoption of AI technology.

Results demonstrate that perceived usefulness of AI has a significant impact on the acceptance of technology in media. This suggests the public is willing to adopt AI in media whenever they have a clear understanding of its benefits. In addition, the Virtual Social Presence scale reveals that digital social interaction helps to enhance acceptance. This indicates that interactions with smart technologies are more positive when users feel that there are others present in virtual environments.

In practical terms, media organizations can improve public acceptance of AI by promoting the benefits of this technology. Recent research, such as Cardaş-Răduţa (2024), suggests that AI can contribute to increasing the effectiveness of journalism in multiple ways. For example, media organizations can improve many aspects of content production, distribution, and consumption by integrating AI into these processes. More precisely, AI can perform predictive analytics on audience preferences, which helps media organizations target and drive content that users desire. This may correspondingly increase user engagement with the content. AI also helps in classifying media data and automatically linking different contents, which makes it easier for media organizations to identify appropriate content and present it in an organized manner. Further, AI media consumption analysis provides media organizations with accurate data on audience behavior, which helps improve future editorial processes that identify and produce content that meets the needs of the audience; this process simultaneously enhances the user experience. Furthermore, these findings provide insight to policymakers on the importance of adopting balanced strategies when introducing AI technologies into the media. It is essential to develop policies that protect privacy rights and ensure transparency regarding AI decision-making processes, which may correspondingly enhance public trust and reduce concerns associated with this technology. Policymakers should also consider setting standards for the use of AI that ensure fairness and impartiality.

Arab Media & Society The Arab Media Hub
